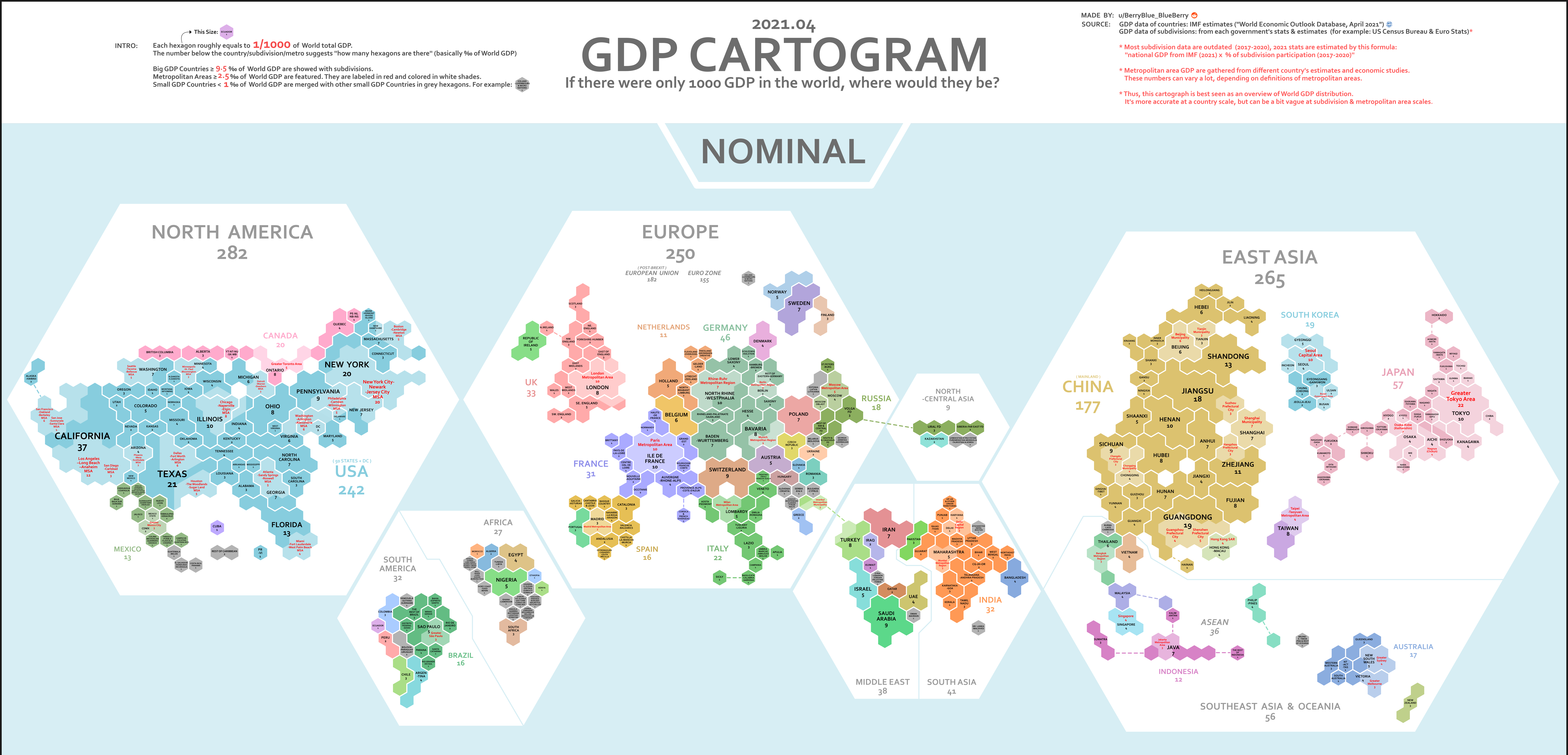

Maps

This GDP Cartogram of the World during 2021 is a general representation of the world's economic output measured by GDP. One hexagon tile is roughly equal to 1/1000 of the world's gdp in 2021. GDP has its faults for measuring prosperity, but it is the total value of goods and services produced in a country and as such can give a rough idea.

Three regions primarily drive the world's economy, those being North America, Europe and East Asia. The USA is the world's largest economy and makes up the majority of North America's GDP. Europe is much more segmented with several nations being significant contributors including, Germany, UK, and France. East Asia's GDP distribution is simpler, with China, the world's 2nd largest economy, and Japan, the worlds 3rd largest economy (fluctuates between Germany and Japan), dominating output.