Evaluating Multisensor-aided Inertial Navigation System (MINS)

1. Introduction

MINS is a filter-based multisensor-aided inertial navigation system, in the same family as visual-inertial odometry (VIO) and SLAM (Simultaneous Localization and Mapping) systems. It can fuse IMU, camera, LiDAR, GPS, and wheel-encoder measurements, and it estimates its state with an Extended Kalman Filter (EKF). An EKF is a Kalman filter that uses Jacobians to linearize the nonlinear motion and measurement models around the current estimate, so that the standard Kalman filter equations can still be applied. Roughly, a Kalman filter predicts where something is and then corrects that prediction with a sensor reading. In MINS, the prediction is made by integrating the IMU readings. The camera (visual) measurements, along with any additional sensors (LiDAR, wheel encoder, GPS), are then used to correct it. My goal was to evaluate MINS on the KAIST Urban dataset and on my lab's (University of Washington) suburban rover dataset. I used kaist2bag to convert the separate sensor bag files into a single bag.

2. Sensor Calibration

I had to calibrate and configure the system separately for the sensors in the KAIST setup and in the rover setup. The main calibration parameters I had to configure were:

- Transformation matrices between sensors: T_imu_camera, T_imu_wheel, T_imu_lidar

- Camera intrinsics and resolution

- IMU noise parameters: accelerometer noise density and bias random walk, gyroscope noise density and bias random walk

- Wheel intrinsics (radius, wheel base)
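The sensor-to-sensor transforms above can be chained through the IMU frame. The following is a minimal sketch of how this works, not MINS's own code. It assumes the convention that `T_a_b` maps a point expressed in frame b into frame a. MINS and the KAIST calibration files may use a different convention, so check before reusing this. The helper names (`make_transform`, `invert_transform`, `transform_point`, `rot_z`) and the extrinsic values are hypothetical and for illustration only. The example derives a camera-to-LiDAR transform from `T_imu_camera` and `T_imu_lidar`.

```python
import numpy as np

# Assumed convention (hypothetical): T_a_b maps a point in frame b into frame a,
# i.e. p_a = R_a_b @ p_b + t_a_b. Verify against the actual config files.


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_transform(T):
    """Closed-form inverse of a rigid transform: (R, t) -> (R^T, -R^T t)."""
    R, t = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ t)


def transform_point(T, p):
    """Map a 3D point p from T's source frame into its target frame."""
    return T[:3, :3] @ p + T[:3, 3]


def rot_z(yaw):
    """Rotation about the z-axis by `yaw` radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Made-up extrinsics for illustration only (not the KAIST or rover values).
T_imu_camera = make_transform(rot_z(np.pi / 2), [0.10, 0.00, 0.05])
T_imu_lidar = make_transform(np.eye(3), [0.00, 0.00, 0.30])

# Chain through the IMU frame: camera <- imu <- lidar.
T_camera_lidar = invert_transform(T_imu_camera) @ T_imu_lidar

# A LiDAR point re-expressed in the camera frame.
p_lidar = np.array([1.0, 2.0, 3.0])
p_camera = transform_point(T_camera_lidar, p_lidar)
```

A useful sanity check is that mapping `p_camera` back into the IMU frame with `T_imu_camera` gives the same result as mapping `p_lidar` directly with `T_imu_lidar`. A mistake in either extrinsic, or a mismatched convention, breaks this equality.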

3. Evaluation

MINS already includes evaluation code, which worked with minimal tuning and setup. I evaluated the trajectories with the Absolute Trajectory Error (ATE). ATE is the root-mean-square error between the N estimated positions $P_i^{est}$ and the corresponding ground-truth positions $P_i^{gt}$:

$$ ATE_{RMSE} = \sqrt{ \frac{1}{N} \sum_{i=1}^{N} \left\| P_{i}^{est} - P_{i}^{gt} \right\|^2 } $$

I achieved an ATE of 9.12 m on an 11 km KAIST Urban trajectory and 1.1 m on our 1 km lab rover trajectory.
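The ATE formula translates directly into a few lines of NumPy. This is a minimal sketch, not the MINS evaluation code. It assumes the estimated and ground-truth positions have already been time-associated (row i of each array is the same timestamp) and expressed in the same frame. Evaluation tools commonly perform a rigid alignment of the two trajectories before computing ATE, and this sketch assumes that step has already been done. The function name `ate_rmse` is hypothetical.

```python
import numpy as np


def ate_rmse(p_est, p_gt):
    """RMSE of position errors between time-associated, already-aligned trajectories.

    p_est, p_gt: arrays of shape (N, 3) where row i holds P_i^est and P_i^gt.
    """
    p_est = np.asarray(p_est, dtype=float)
    p_gt = np.asarray(p_gt, dtype=float)
    if p_est.shape != p_gt.shape:
        raise ValueError("estimated and ground-truth trajectories must have the same shape")
    # ||P_i^est - P_i^gt|| for every i
    errors = np.linalg.norm(p_est - p_gt, axis=1)
    # Square root of the mean squared error, matching the ATE_RMSE formula
    return float(np.sqrt(np.mean(errors ** 2)))


# Toy example: only the middle pose is off, by 1 m.
est = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
gt = [[0.0, 0.0, 0.0], [1.0, 1.0, 0.0], [2.0, 0.0, 0.0]]
print(ate_rmse(est, gt))  # sqrt(1/3) ≈ 0.577
```

Because the errors are squared before averaging, a few large deviations (for example, drift late in a long urban run) raise the RMSE more than many small ones. This is worth keeping in mind when comparing an 11 km trajectory with a 1 km one.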

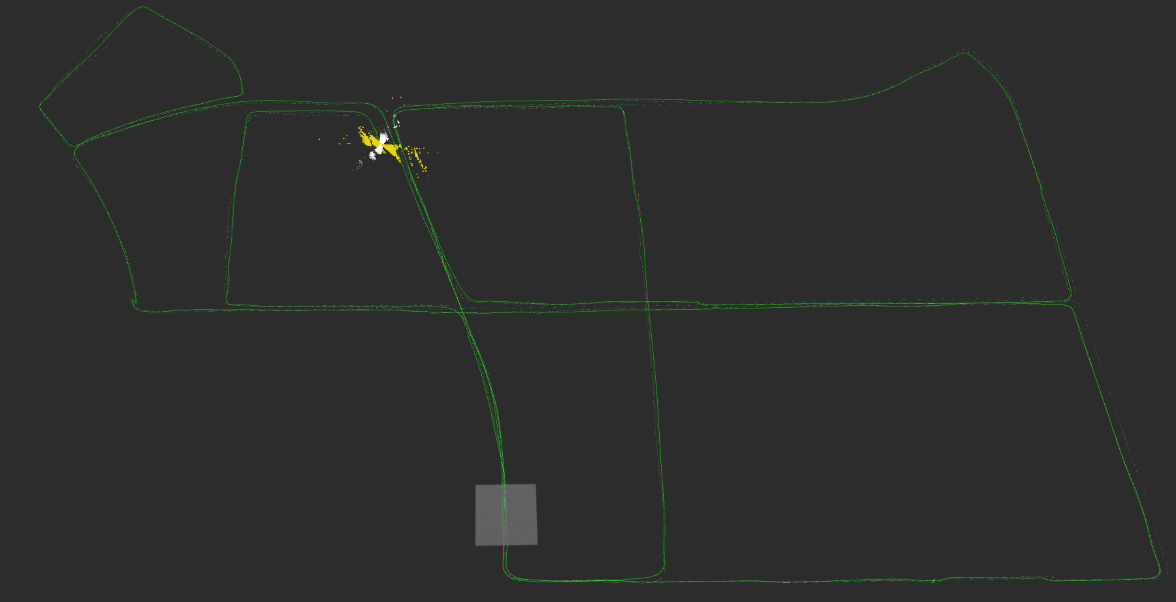

MINS Result on KAIST Urban dataset

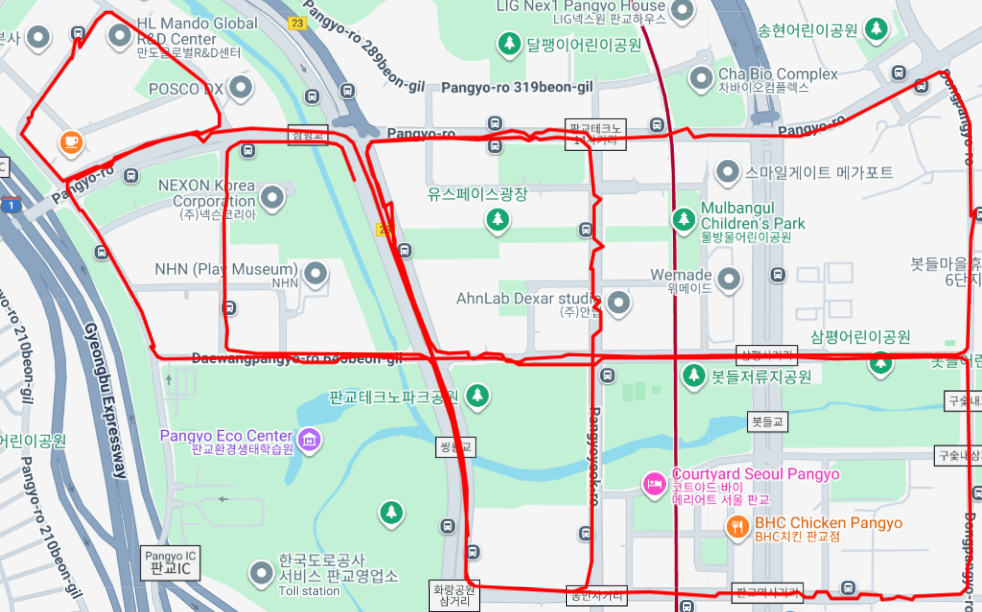

MINS Result on Lab Rover Dataset

Complete MINS Trajectory of KAIST Urban28

Complete Ground Truth Trajectory of KAIST Urban28